Credit: Yoshiyuki Yatsuda

STAIR Lab. (JP) collaborating with Surface & Architecture Inc., Kyoko Kunoh, Tomohiro Akagawa, Tanoshim Inc., mokha Inc., and Tokyo Studio Co., Ltd. (JP)

Recent AI research makes it possible to teach computers the names of things by showing them many examples. The keys are large amounts of training data and deep learning software. Building on these, the artists developed an AI that can classify 406 kinds of flowers, trained on more than 300,000 flower photographs.

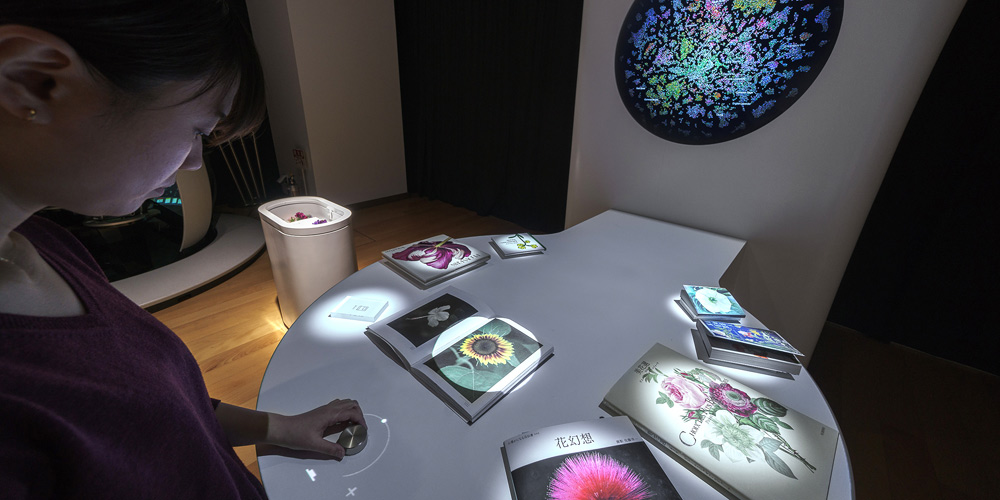

hananona is an interactive work that visualizes how an AI classifies flowers. When it is shown a flower, it identifies the flower's name and highlights its class on a visual “flower map”, a visualization of the inside of the AI's brain. The map is made up of image clusters, each grouping the flower photos that the AI has learned to treat as the same class. By exploring these clusters, users can see how the AI sorts flowers into classes.

Users are encouraged to challenge hananona with their own flower photos or with other materials, such as pictures, paintings, and flower-like objects, to observe how the AI responds to flowers at different levels of abstraction.

Credits

STAIR Lab., Chiba Institute of Technology

Creative direction, design: Surface & Architecture Inc.

Art direction: Kyoko Kunoh

Interaction design, programming: Tomohiro Akagawa

Programming: Tanoshim Inc.

Server programming: mokha Inc.

Furniture production, site setup: Tokyo Studio Co., Ltd.