Supasorn Suwajanakorn (TH), Steven Seitz (US), Ira Kemelmacher-Shlizerman (IL)

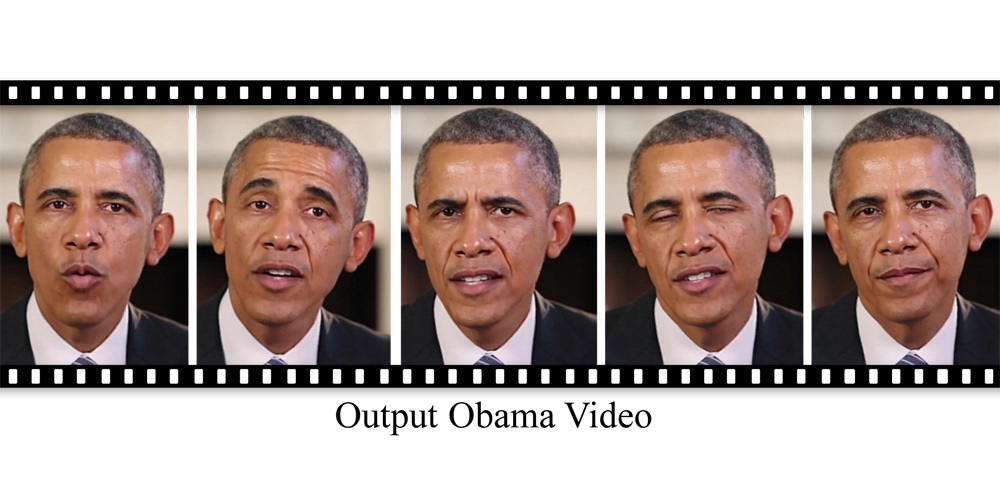

Given audio of President Barack Obama, the authors synthesize a high-quality video of him speaking with accurate lip sync, composited into a target video clip.

Trained on many hours of his weekly address footage, a recurrent neural network learns the mapping from raw audio features to mouth shapes. Given the mouth shape at each time instant, the method synthesizes high-quality mouth texture and composites it, with proper 3D pose matching, into a target video so that what he appears to be saying matches the input audio track. The approach produces photorealistic results.
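To make the audio-to-mouth-shape step more concrete, here is a minimal NumPy sketch of a single-layer LSTM that maps a sequence of per-frame audio feature vectors to per-frame mouth-shape coefficients. It is an illustration under stated assumptions, not the authors' implementation. The class name `LSTMAudioToMouth`, the `delay` parameter (which lets the output for frame t look a few frames into the future audio), and all dimensions are hypothetical placeholders. The weights are random and untrained, so the outputs only demonstrate shapes and data flow.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class LSTMAudioToMouth:
    """Hypothetical, untrained single-layer LSTM: audio features -> mouth-shape coefficients."""

    def __init__(self, n_audio, n_hidden, n_mouth, seed=0):
        rng = np.random.default_rng(seed)
        # Stacked weights for the input, forget, output gates and the candidate cell.
        self.W = rng.normal(0.0, 0.1, (4 * n_hidden, n_audio + n_hidden))
        self.b = np.zeros(4 * n_hidden)
        # Linear readout from the hidden state to mouth-shape coefficients.
        self.W_out = rng.normal(0.0, 0.1, (n_mouth, n_hidden))
        self.b_out = np.zeros(n_mouth)
        self.n_hidden = n_hidden

    def forward(self, audio, delay=0):
        """audio: (T, n_audio) array. Returns a (T, n_mouth) array.

        The output for frame k is read after the network has consumed
        frames up to k + delay (assumed look-ahead, padded with zeros).
        """
        padded = np.vstack([audio, np.zeros((delay, audio.shape[1]))])
        h = np.zeros(self.n_hidden)
        c = np.zeros(self.n_hidden)
        outputs = []
        for x in padded:
            z = self.W @ np.concatenate([x, h]) + self.b
            i, f, o, g = np.split(z, 4)
            i, f, o, g = sigmoid(i), sigmoid(f), sigmoid(o), np.tanh(g)
            c = f * c + i * g          # update cell state
            h = o * np.tanh(c)         # update hidden state
            outputs.append(self.W_out @ h + self.b_out)
        # Discard the first `delay` outputs so output k aligns with input frame k.
        return np.array(outputs[delay:])


# Usage with random stand-in features (placeholders, not real audio).
model = LSTMAudioToMouth(n_audio=13, n_hidden=32, n_mouth=8)
audio = np.random.default_rng(1).normal(size=(100, 13))
mouth = model.forward(audio, delay=5)
print(mouth.shape)  # (100, 8)
```

The look-ahead delay is included because mouth shape can depend on upcoming sounds; making it an explicit parameter keeps the network unidirectional while still letting each output see a short window of future audio.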

Credits

GRAIL Lab @ University of Washington