Radical Atoms: Beyond Tangible Bits

Prof. Hiroshi Ishii, Dr. Jean-Baptiste Labrune, Dr. Hayes Raffle, Dr. Amanda Parkes, Leonardo Bonanni, Cati Vaucelle, Jamie Zigelbaum, Adam Kumpf, Keywon Chung, Daniel Leithinger, Marcelo Coelho and Peter Schmitt (MIT Media Laboratory)

http://tangible.media.mit.edu/project.php?recid=129

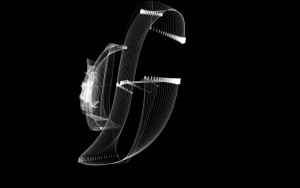

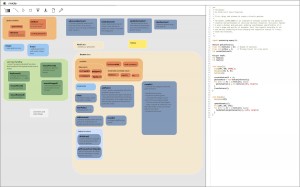

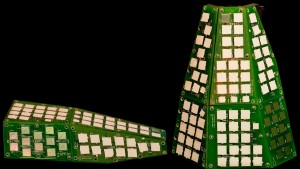

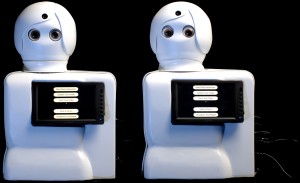

Radical Atoms is our new vision-driven design research on interactions with futuristic physical material that can 1) conform to structural constraints, 2) transform structure and behavior, and 3) inform new abilities. Tangible Bits, the vision we introduced in 1997, sought a seamless coupling of bits and atoms by giving physical form to digital information and computation. It guided our series of Tangible User Interface designs such as inTouch, curlybot, topobo, musicBottle, Urp, Audiopad, SandScape, PingPongPlus, Triangles, PegBlocks, and I/O Brush that were exhibited at the Ars Electronica Center from 2001 to 2004 and at other venues. Over time, however, we recognized a fundamental limitation of Tangible Bits.

Bits in the form of pixels on a screen are malleable, programmable, and dynamic, while atoms in the form of physical matter are static and extremely rigid. This fundamental gap between bits and atoms makes it very difficult to synchronize the digital state and physical state of information we want to represent and control.

Radical Atoms addresses this limitation of Tangible Bits by proposing interaction designs with a to-be-invented physical material that we can “forge” to conform, transform, and inform. This video presents early design sketches of Radical Atoms that go beyond Tangible Bits and make atoms dance as bits (pixels) can today.

CREDITS: Thanks to Neal Stephenson, Chris Bangle, Banny Banerjee, Chris Woebken, Jinha Lee and Xiao Xiao for their discussions and contributions. Thanks also to the Tangible Interfaces class taught in Fall 2008 at the MIT Media Laboratory.